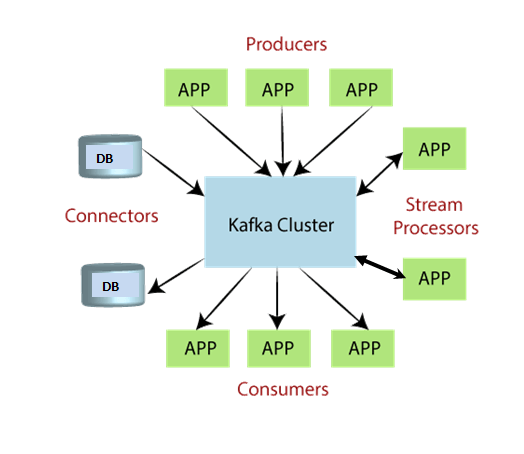

# Apache Kafka Tutorial

This Apache Kafka tutorial covers both the basic and advanced concepts of Apache Kafka and is designed for beginners and professionals alike. Apache Kafka is an open-source stream-processing platform used to handle real-time data feeds. It acts as a broker between two parties: a sender and a receiver. It can handle trillions of events a day. This tutorial covers everything from Kafka's architecture to its core concepts.

## What is Apache Kafka?

Apache Kafka is a distributed streaming platform. It is a publish-subscribe messaging system that lets applications, servers, and processors exchange data. Apache Kafka was originally developed at LinkedIn and was later donated to the Apache Software Foundation. Today it is maintained as an Apache Software Foundation project, with Confluent as a major contributor. Apache Kafka addresses the problem of slow data communication between a sender and a receiver.

## What is a messaging system?

A messaging system exchanges messages between two or more parties, such as people, devices, or applications. A publish-subscribe messaging system lets a sender write (publish) a message and a receiver read it. In Apache Kafka, the sender is called a producer, which publishes messages, and the receiver is called a consumer, which consumes those messages by subscribing to them.

## What is a streaming process?

A streaming process is the processing of data in parallel-connected systems. It allows different applications to process data in parallel, so that one record is processed without waiting for the output of the previous record. A distributed streaming platform therefore simplifies stream processing and parallel execution for the user. A streaming platform in Kafka has the following key capabilities:

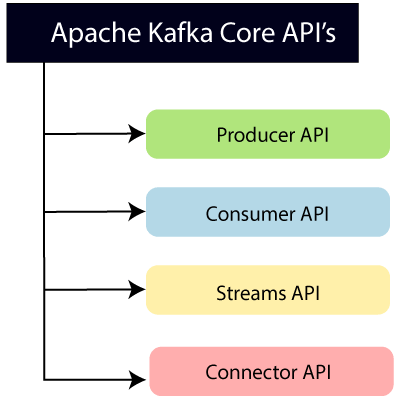
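The publish-subscribe and stream-processing ideas above can be made concrete with a small in-memory model. This is a hypothetical sketch in plain Java, not the Kafka client API: `ToyTopic`, `publish`, `poll`, and `process` are illustrative names, a topic is modelled as a single append-only list, and each subscriber ("group", loosely analogous to a Kafka consumer group) tracks its own read position. Everything runs in one thread for clarity.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical toy topic: NOT the Kafka client API, only an illustration of the model.
class ToyTopic {
    private final String name;
    private final List<String> log = new ArrayList<>();           // append-only record log
    private final Map<String, Integer> offsets = new HashMap<>(); // next offset to read, per group

    ToyTopic(String name) { this.name = name; }

    String name() { return name; }

    // Producer side: append a record and return the offset it was stored at.
    int publish(String record) {
        log.add(record);
        return log.size() - 1;
    }

    // Consumer side: return the records this group has not read yet, then advance its offset.
    List<String> poll(String group) {
        int from = offsets.getOrDefault(group, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        offsets.put(group, log.size());
        return batch;
    }
}

public class PubSubSketch {
    // Hypothetical stream processor: consume new records from input, transform each,
    // and publish the result to output. Returns how many records were processed.
    static int process(ToyTopic input, ToyTopic output, String group, Function<String, String> fn) {
        List<String> batch = input.poll(group);
        for (String record : batch) {
            // Each record is transformed on its own; no record depends on another's result.
            output.publish(fn.apply(record));
        }
        return batch.size();
    }

    public static void main(String[] args) {
        ToyTopic orders = new ToyTopic("orders");
        ToyTopic upper = new ToyTopic("orders-upper");

        orders.publish("apple");
        orders.publish("banana");

        // Two independent subscribers each receive every record.
        System.out.println(orders.poll("billing"));  // [apple, banana]
        System.out.println(orders.poll("shipping")); // [apple, banana]

        orders.publish("cherry");
        System.out.println(orders.poll("billing"));  // [cherry]

        process(orders, upper, "uppercaser", String::toUpperCase);
        System.out.println(upper.poll("reader"));    // [APPLE, BANANA, CHERRY]
    }
}
```

Because each subscriber keeps its own offset, adding a new consumer does not change what existing consumers read; this decoupling of producers from consumers is the core of the publish-subscribe model. A real application would instead use the Kafka clients library (`KafkaProducer`, `KafkaConsumer`) against a running broker.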

To learn and understand Apache Kafka, you should know its four core APIs:

- Producer API: lets an application publish streams of records to one or more topics (topics are discussed in a later section).
- Consumer API: lets an application subscribe to one or more topics and process the stream of records produced to them.
- Streams API: lets an application efficiently transform input streams into output streams. It allows an application to act as a stream processor that consumes an input stream from one or more topics and produces an output stream to one or more output topics.
- Connector API: lets you build and run reusable producers or consumers that connect Kafka topics to existing applications or data systems.

## Why Apache Kafka?

The following reasons describe the need for Apache Kafka.

## Prerequisite

Readers should have basic knowledge of Java programming and some familiarity with Linux commands.

## Audience

This Apache Kafka tutorial is designed for beginners, developers, and anyone who wants to learn something new.