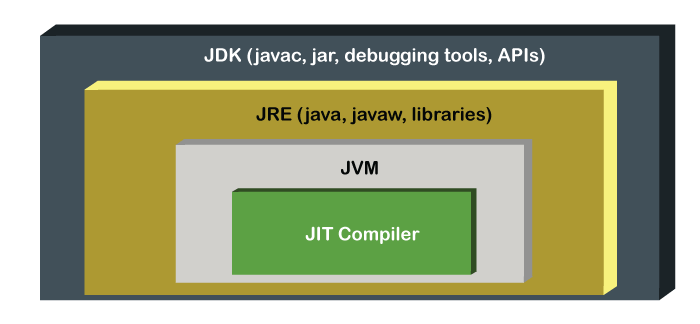

JIT in Java

When we write a program in any programming language, the code must be converted into a machine-understandable form, because the machine only understands binary language. Each programming language has its own compiler. A compiler is a program that converts high-level language code into lower-level code. The Java programming language uses the compiler named javac. It converts Java source code into bytecode, an intermediate, platform-independent form that the Java Virtual Machine (JVM) executes. JIT is a part of the JVM that optimizes the performance of the application. JIT stands for Just-In-Time compiler, and JIT compilation is also known as dynamic compilation. In this section, we will learn what JIT in Java is, how it works, and its optimization levels.

What is JIT in Java?

JIT in Java is an integral part of the JVM. Instead of interpreting the same bytecode every time it runs, the JIT compiler translates frequently executed bytecode into native machine code. This can make execution many times faster than pure interpretation. It is most beneficial for long-running, compute-intensive programs, where the time spent compiling is repaid by faster execution. It optimizes the performance of the Java application at run time.
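The JIT lives inside the JVM, so a running program can ask the JVM about it through the standard `java.lang.management` API. The sketch below uses `ManagementFactory.getCompilationMXBean()`, which returns `null` when the JVM has no compilation system. The class name `JitInfo` and the message wording are illustrative only. On a HotSpot JVM, the reported name is typically something like `HotSpot 64-Bit Tiered Compilers`, but the exact text depends on the JVM vendor and version.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

public class JitInfo {

    // Returns a short description of the JVM's JIT compiler, if it has one.
    static String describeJit() {
        // Per the Javadoc, this returns null if the JVM has no compilation system
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null) {
            return "No JIT compiler (interpreter only)";
        }
        StringBuilder sb = new StringBuilder("JIT compiler: ").append(jit.getName());
        // Compilation-time monitoring is optional, so check before querying it
        if (jit.isCompilationTimeMonitoringSupported()) {
            sb.append(", total compilation time: ")
              .append(jit.getTotalCompilationTime())
              .append(" ms");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(describeJit());
    }
}
```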

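JIT compilation combines the two traditional approaches to translating code into machine code: AOT (Ahead-of-Time) compilation and interpretation. An AOT compiler compiles code into native machine language before the program runs, the same as a conventional compiler. An interpreter executes the code instruction by instruction. A JIT compiler does the same kind of translation as an AOT compiler, but it does it at run time. It transforms the bytecode of a VM into machine code while the program is running. JIT compilers perform the following optimizations: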

Advantages of JIT Compiler

Disadvantages of JIT Compiler

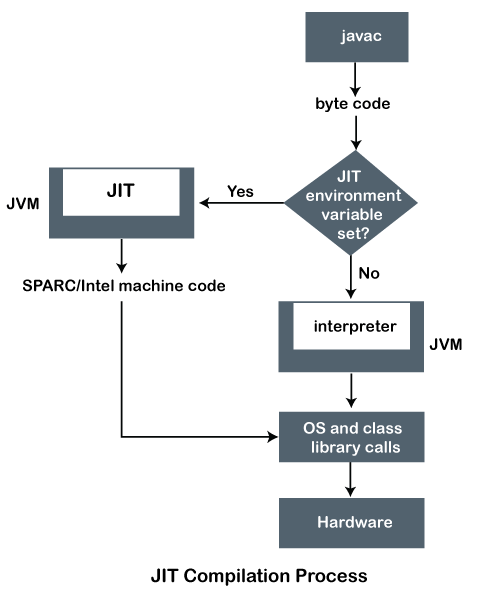

Working of JIT Compiler

If the JIT compiler is enabled (it is enabled by default), the JVM reads the .class file (bytecode) and interprets it. It then passes the bytecode to the JIT compiler for further processing. The JIT compiler transforms that bytecode into native code (machine-readable code).

Therefore, the JIT compiler boosts the performance of the Java application. The following flow chart shows how the JIT compiler works.

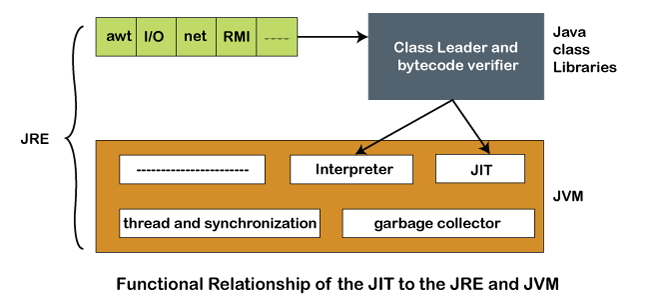
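The flow can be observed with a small program that calls one method many times. The sketch below is illustrative. The class `HotLoop`, the method `sumOfSquares`, and the loop counts are arbitrary choices, not anything the JVM requires. On a HotSpot JVM, you can compile it with `javac HotLoop.java` and run it with `java -XX:+PrintCompilation HotLoop`. That flag prints a line whenever the JIT compiles a method, so `HotLoop::sumOfSquares` would be expected to appear in the output. The exact output, and the point at which a method counts as hot, vary by JVM version and flags.

```java
public class HotLoop {

    // A small, frequently called method: a typical candidate for JIT compilation
    static long sumOfSquares(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += (long) i * i;
        }
        return sum;
    }

    public static void main(String[] args) {
        long total = 0;
        // The first calls run in the bytecode interpreter. After enough calls,
        // the JVM treats the method as hot, and later calls can run as native
        // code produced by the JIT compiler.
        for (int round = 0; round < 20_000; round++) {
            total += sumOfSquares(1_000);
        }
        System.out.println(total);
    }
}
```

The program's result is the same whether a call is interpreted or JIT-compiled. Only the speed changes.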

The following figure shows the functional relationship of the JIT compiler with the JRE and the JVM.

Optimization Levels

The JIT compiler can compile a method at different optimization levels, and each level provides a different degree of performance. In IBM's J9 JVM (and Eclipse OpenJ9), the JIT compiler provides the following levels of optimization:

The default initial optimization level is warm. A hotter optimization level gives better performance, but it costs more memory and CPU time. At the higher optimization levels, the virtual machine uses a sampling thread to identify methods that take a long time to execute. The higher optimization levels include the following techniques:

The above techniques use more memory and CPU to improve the performance of the Java application. They increase the cost of compilation, but the faster execution compensates for it.
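The promotion from warm to hotter levels can be pictured with a toy simulation. This sketch does not reflect how any real JVM is implemented. The level names follow the IBM J9/OpenJ9 scheme, and each method starts at warm, as described above. The `SAMPLES_PER_PROMOTION` threshold and the class `LevelPromotion` are hypothetical. Each call to `recordSample` stands in for the sampling thread finding a method running. After enough samples, the method is treated as recompiled at the next hotter level.

```java
import java.util.HashMap;
import java.util.Map;

public class LevelPromotion {

    // Level names as used by the IBM J9 / Eclipse OpenJ9 JIT, coldest to hottest
    enum Level { COLD, WARM, HOT, VERY_HOT, SCORCHING }

    // HYPOTHETICAL threshold: samples needed to move a method up one level.
    // Real JVMs use their own internal heuristics, not this number.
    static final int SAMPLES_PER_PROMOTION = 100;

    private final Map<String, Level> levels = new HashMap<>();
    private final Map<String, Integer> samples = new HashMap<>();

    // Called each time the (simulated) sampling thread finds `method` running
    void recordSample(String method) {
        levels.putIfAbsent(method, Level.WARM); // default initial level is warm
        int count = samples.merge(method, 1, Integer::sum);
        Level current = levels.get(method);
        if (count % SAMPLES_PER_PROMOTION == 0 && current != Level.SCORCHING) {
            // "Recompile" the method at the next, hotter level
            levels.put(method, Level.values()[current.ordinal() + 1]);
        }
    }

    // Methods that were never sampled are reported at the default level
    Level levelOf(String method) {
        return levels.getOrDefault(method, Level.WARM);
    }

    public static void main(String[] args) {
        LevelPromotion jit = new LevelPromotion();
        for (int i = 0; i < 250; i++) {
            jit.recordSample("hotMethod");
        }
        jit.recordSample("rareMethod");
        System.out.println("hotMethod -> " + jit.levelOf("hotMethod"));   // VERY_HOT
        System.out.println("rareMethod -> " + jit.levelOf("rareMethod")); // WARM
    }
}
```

The simulation counts samples rather than measuring time so that its behaviour is deterministic. The trade-off it models is the one described above: only the methods that are sampled often pay the extra memory and CPU cost of hotter compilation.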
