Message Compression in Kafka

As we have seen, the producer often sends data to Kafka as text, commonly in JSON format. JSON has a drawback: data is stored in string form, and every record repeats the same field names. This duplicated content is stored again and again in the Kafka topic, so it occupies a lot of disk space. To reduce disk usage, the producer can batch (linger) the data and compress it before sending it to Kafka.

Need for Message Compression

The following reasons describe the need to reduce the message size:

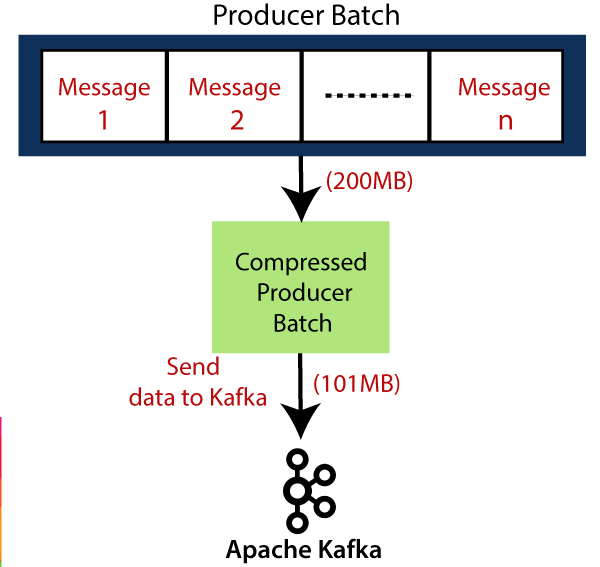
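To see why repeated JSON records compress well, here is a minimal sketch that gzips a batch of JSON records sharing the same field names and compares the raw and compressed sizes. The record fields (`userId`, `eventType`, `country`) are hypothetical, and the JDK's `java.util.zip.GZIPOutputStream` is used only as a stand-in for the codec Kafka applies to a producer batch; this is not Kafka's own compression code path.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

public class JsonCompressionDemo {

    // Builds newline-separated JSON records that all repeat the same
    // field names (hypothetical schema, for illustration only).
    static String buildJsonRecords(int count) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < count; i++) {
            sb.append("{\"userId\":").append(i)
              .append(",\"eventType\":\"page_view\",\"country\":\"IN\"}\n");
        }
        return sb.toString();
    }

    // Compresses the input with the JDK's built-in gzip implementation.
    static byte[] gzip(byte[] input) {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(bos)) {
            gz.write(input);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bos.toByteArray();
    }

    public static void main(String[] args) {
        byte[] raw = buildJsonRecords(1000).getBytes(StandardCharsets.UTF_8);
        byte[] compressed = gzip(raw);
        System.out.printf("raw: %d bytes, gzip: %d bytes%n", raw.length, compressed.length);
    }
}
```

Because the field names and most values repeat in every record, gzip can replace the repeats with short back-references, which is why the compressed output is much smaller than the raw JSON.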

Producer Batch/Record Batch

A producer writes messages to Kafka one by one, but it does not send each message individually. Instead, the producer groups the messages headed for the same partition into a batch and sends the batch to Kafka when the batch becomes full or when the wait time set by 'linger.ms' has elapsed. Such a batch is known as a producer batch (or record batch). The default batch size ('batch.size') is 16 KB, and it can be configured to a larger value. The larger the batch, the better the compression ratio, the throughput, and the efficiency of producer requests.

Note: A message larger than the batch size will not be batched with other messages. Also, a batch is allocated per partition, so do not set the batch size to a very high number.

The bigger the producer batch, the more effective message compression becomes.

Message Compression Format

Message compression is typically configured on the producer side, so no configuration changes are required on the consumer or broker side; consumers decompress the batches automatically.

In the figure, a producer batch of 200 MB is created. After compression, it is reduced to 101 MB. The compression codec is chosen with the 'compression.type' producer setting. The type can be 'gzip', 'snappy', 'lz4', 'zstd' (Kafka 2.1 and later), or 'none' (the default). Among 'gzip', 'snappy', and 'lz4', 'gzip' typically gives the highest compression ratio, at the cost of more CPU.

Disadvantages of Message Compression

Message compression has the following disadvantages:

Overall, message compression is a good way to reduce the disk load.
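The producer settings discussed in the Producer Batch and Message Compression Format sections can be sketched as a `java.util.Properties` object using Kafka's config key names (`batch.size`, `linger.ms`, `compression.type`). The broker address `localhost:9092` and all values shown are assumptions for illustration, not recommendations. The sketch also compares the gzip compression ratio of a one-record batch with a 500-record batch, using the JDK's gzip as a stand-in for Kafka's codec, to illustrate why larger batches compress better.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Properties;
import java.util.zip.GZIPOutputStream;

public class ProducerBatchSketch {

    // Producer settings discussed above, written with Kafka's config key
    // names. The values are illustrative assumptions, not recommendations.
    static Properties producerProps() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092"); // assumed local broker
        props.setProperty("batch.size", "32768");      // per-partition batch size in bytes (default 16384)
        props.setProperty("linger.ms", "20");          // wait up to 20 ms for a batch to fill
        props.setProperty("compression.type", "gzip"); // or snappy, lz4, zstd, none
        return props;
    }

    // Ratio of gzip-compressed size to raw size for a batch of `count`
    // JSON records (hypothetical schema); smaller means better compression.
    static double compressionRatio(int count) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < count; i++) {
            sb.append("{\"userId\":").append(i).append(",\"eventType\":\"page_view\"}\n");
        }
        byte[] raw = sb.toString().getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(bos)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return (double) bos.size() / raw.length;
    }

    public static void main(String[] args) {
        producerProps().forEach((k, v) -> System.out.println(k + " = " + v));
        System.out.printf("1 record:    ratio %.2f%n", compressionRatio(1));
        System.out.printf("500 records: ratio %.2f%n", compressionRatio(500));
    }
}
```

In a real application, these properties, together with 'key.serializer' and 'value.serializer' settings, would be passed to the `KafkaProducer` constructor from the kafka-clients library. A single record usually grows under gzip because of the fixed header and trailer, while a large batch shares that overhead and its repeated content across many records.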