TensorFlow Mobile

TensorFlow Mobile is used on mobile platforms such as Android and iOS. It is aimed at developers who already have a working TensorFlow model and want to integrate it into a mobile environment. It is also an option for those who cannot use TensorFlow Lite. The primary challenges in moving a model from a desktop environment into a mobile environment are:

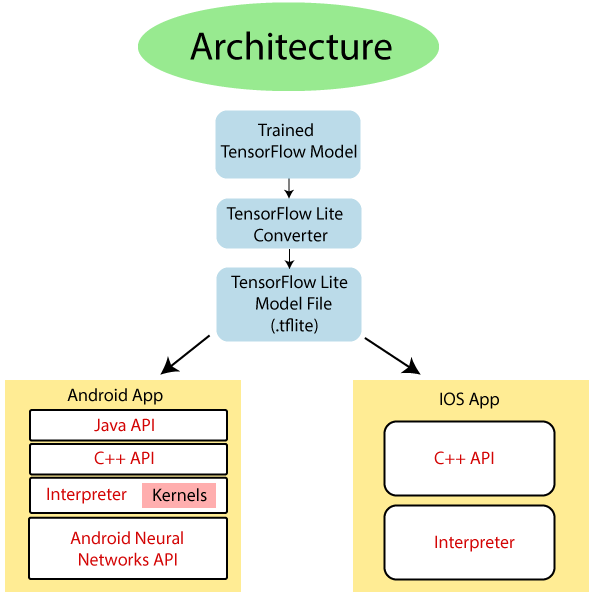

Cases for Using Mobile Machine Learning

Developers typically train TensorFlow models on high-powered GPUs. However, sending all of a device's data across a network connection to such machines is time-consuming and costly, so running the model directly on the mobile device is often the easier option.

1) Image Recognition in TensorFlow: Image recognition helps an app detect or make sense of the images captured with a phone. If users take photos to find out what is in them, the app can apply appropriate filters or label the photos so they can be found whenever necessary.

2) TensorFlow Speech Recognition: Many applications can be built with a speech-driven interface. Much of the time the user is not giving instructions, so streaming audio continuously to a server would be wasteful and create many problems.

3) Gesture Recognition in TensorFlow: Gesture recognition lets users control applications with their hands or other gestures by analyzing sensor data. TensorFlow can be used to build these models.

Other examples include optical character recognition (OCR), translation, text classification, and voice recognition.

TensorFlow Lite

TensorFlow Lite is a lightweight version of TensorFlow designed specifically for mobile platforms and embedded devices. It provides on-device machine learning with low latency and a small binary size. TensorFlow Lite supports a set of core operators that have been tuned for mobile platforms, and it also supports custom operations in models.

TensorFlow Lite defines a new file format based on FlatBuffers, an open-source, cross-platform serialization library. It also includes a new mobile interpreter, which keeps apps small and fast. The interpreter uses a custom memory allocator to minimize load and execution latency.

Architecture of TensorFlow Lite

A trained TensorFlow model on disk can be converted into the TensorFlow Lite file format using the TensorFlow Lite converter. The converted file is then used in the mobile application. For deploying the lite model file:
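The convert-then-deploy flow described above can be sketched in Python. This is a minimal sketch under stated assumptions: the `TinyClassifier` module, its random weights, the temporary directories, and the file name `model.tflite` are hypothetical stand-ins for a real trained model. The sketch saves a SavedModel to disk, converts it with `tf.lite.TFLiteConverter.from_saved_model`, writes the resulting FlatBuffer file, and runs it with `tf.lite.Interpreter` on the desktop as a stand-in for the on-device interpreter. Recent TensorFlow releases may warn that the Python interpreter has moved to the separate LiteRT (`ai_edge_litert`) package, so the interpreter import can differ depending on your version.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class TinyClassifier(tf.Module):
    """Hypothetical stand-in for a trained model: 4 features -> 3 class probabilities."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 3]), name="w")
        self.b = tf.Variable(tf.zeros([3]), name="b")

    @tf.function(input_signature=[tf.TensorSpec([1, 4], tf.float32)])
    def __call__(self, x):
        return tf.nn.softmax(tf.matmul(x, self.w) + self.b)


# 1. Save the "trained" model to disk as a SavedModel.
model = TinyClassifier()
saved_dir = tempfile.mkdtemp()
tf.saved_model.save(model, saved_dir, signatures=model.__call__.get_concrete_function())

# 2. Convert it to the TensorFlow Lite (FlatBuffer) format; the result is raw bytes.
converter = tf.lite.TFLiteConverter.from_saved_model(saved_dir)
tflite_model = converter.convert()

# 3. Write the .tflite file that would be bundled with the mobile app.
model_path = os.path.join(tempfile.mkdtemp(), "model.tflite")
with open(model_path, "wb") as f:
    f.write(tflite_model)

# 4. Load and run the file with the TensorFlow Lite interpreter.
interpreter = tf.lite.Interpreter(model_path=model_path)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]

x = np.random.rand(1, 4).astype(np.float32)
interpreter.set_tensor(input_index, x)
interpreter.invoke()
probs = interpreter.get_tensor(output_index)
print("TF Lite output:", probs)
```

On Android or iOS, the same `model.tflite` file would be shipped inside the app and loaded by that platform's TensorFlow Lite interpreter instead of the Python one.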

Custom kernels can also be implemented using the C++ API. To summarize, TensorFlow Lite supports a set of operators that have been tuned for mobile platforms, and it also supports custom operations in models.
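The converter exposes settings that relate to this operator support. The following is a minimal sketch, again using a hypothetical toy model (`ScaledRelu`) and a temporary directory: it tells the converter to prefer the built-in TensorFlow Lite operators, to fall back to selected TensorFlow operators (`SELECT_TF_OPS`) only for operations without a built-in equivalent, and to keep any remaining unknown operations as custom ops (`allow_custom_ops`) instead of failing. A model converted with custom ops only runs if the app registers a matching custom kernel, for example one written with the C++ API, and a model that uses `SELECT_TF_OPS` needs the Flex delegate at runtime, which increases binary size.

```python
import tempfile

import tensorflow as tf


class ScaledRelu(tf.Module):
    """Hypothetical toy model: y = 2 * relu(x) for a batch of 4-feature rows."""

    @tf.function(input_signature=[tf.TensorSpec([None, 4], tf.float32)])
    def __call__(self, x):
        return 2.0 * tf.nn.relu(x)


model = ScaledRelu()
saved_dir = tempfile.mkdtemp()
tf.saved_model.save(model, saved_dir, signatures=model.__call__.get_concrete_function())

converter = tf.lite.TFLiteConverter.from_saved_model(saved_dir)
# Prefer the operators tuned for mobile; fall back to selected TensorFlow ops
# only for operations that have no TensorFlow Lite built-in equivalent.
converter.target_spec.supported_ops = [
    tf.lite.OpsSet.TFLITE_BUILTINS,
    tf.lite.OpsSet.SELECT_TF_OPS,
]
# Keep any remaining unknown operations as custom ops instead of failing;
# the app must then provide a matching custom kernel at runtime.
converter.allow_custom_ops = True

tflite_model = converter.convert()
print(f"Converted model size: {len(tflite_model)} bytes")
```

For this toy model every operation has a built-in version, so neither fallback is actually used; the settings only matter for models that contain operations TensorFlow Lite does not support natively.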

TensorFlow Lite Vs. TensorFlow Mobile

Having seen what TensorFlow Lite and TensorFlow Mobile are, and how each supports TensorFlow in mobile environments and embedded systems, we can now look at how they differ. The differences between TensorFlow Mobile and TensorFlow Lite are given below:
