What is Microsoft Azure Functions Premium plan?

The Azure Functions Premium plan (also known as the Elastic Premium plan) is a hosting option for function apps. Other hosting plans, such as the Consumption plan and the Dedicated (App Service) plan, are also available. Hosting our functions in the Premium plan benefits them in the following ways:

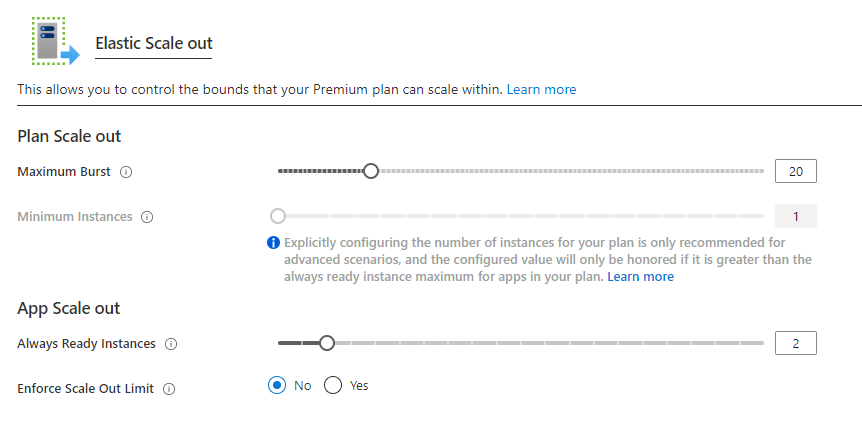

Billing

Billing for the Premium plan is based on the number of core-seconds and the amount of memory allocated across all instances. The Consumption plan, by contrast, is billed per execution and for the memory consumed. There are no execution charges in the Premium plan. At least one instance must be allocated at all times in every plan.

No more cold starts

A cold start is the extra latency experienced on the first call to an app. The Premium plan provides two features that work together to avoid cold starts: always ready instances and pre-warmed instances.

Always ready instances

With the Premium plan, we can keep our app ready at all times on a specified number of instances. The maximum number of always ready instances is 20. When events start triggering the app, they are routed to the always ready instances first. As the function becomes active, additional instances are warmed up as a buffer. This buffer prevents cold starts on the new instances required during scale-out. These buffered instances are called pre-warmed instances. By combining always ready instances with a pre-warmed buffer, our app can effectively eliminate cold starts.

Pre-warmed instances

Pre-warmed instances continue to buffer until the maximum scale-out limit is reached. The default pre-warmed instance count is 1, and for most scenarios it should stay at 1. If our app takes a long time to warm up (for example, when it uses a custom container image), we may need to increase this buffer. A pre-warmed instance becomes active only after all active instances have been sufficiently used.

The following example shows how always ready and pre-warmed instances work together. A Premium function app is configured with five always ready instances and the default of one pre-warmed instance.
When the app is idle and no events are triggering it, it runs on its five always ready instances. At this point we aren't charged for a pre-warmed instance, because the always ready instances aren't being used and no pre-warmed instance has been allocated. As soon as the first trigger arrives, the five always ready instances become active and a pre-warmed instance is allocated. The app is now running with six provisioned instances: the five always ready instances, which are now active, and a sixth, pre-warmed buffer instance that remains inactive. If the rate of executions keeps increasing, the five active instances are eventually fully used. When the platform decides to scale beyond five instances, it scales into the pre-warmed instance. There are now six active instances, and a seventh instance is immediately provisioned to refill the pre-warmed buffer. This sequence of scaling and pre-warming continues until the app's maximum instance count is reached. No instances are pre-warmed or activated beyond the maximum.

Maximum function app instances

In addition to the plan's maximum burst limit, we can configure a per-app maximum by using the app scale limit setting.

Private network connectivity

Function apps deployed to a Premium plan can use VNet integration for web apps. Once this is configured, our app can communicate with resources within our VNet, or with resources secured via service endpoints. IP restrictions can also be used to limit incoming traffic to the app. When assigning a subnet to the function app, we need an IP block with at least 100 available addresses.

Longer run duration

In the Consumption plan, a single function execution is limited to 10 minutes. In the Premium plan, the run duration defaults to 30 minutes to prevent runaway executions. For Premium plan apps, we can modify the functionTimeout setting in host.json to make the duration unbounded. When the duration is set to unbounded, our function app is guaranteed to run for at least 60 minutes.
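As a rough illustration of the run-duration limits above, the following Python sketch builds a host.json body with an unbounded `functionTimeout` (the value `"-1"`) and checks a timeout string against the plan limits stated in this section. The helper names, the `"consumption"`/`"premium"` labels, and the simplified `hh:mm:ss` parsing are assumptions made for this example; they are not part of any Azure SDK.

```python
import json

# Limit stated in the text: a single Consumption plan execution cannot exceed 10 minutes.
CONSUMPTION_MAX_MINUTES = 10


def to_minutes(timeout: str) -> float:
    """Convert an "hh:mm:ss" functionTimeout value to minutes (day prefixes are not handled)."""
    hours, minutes, seconds = (int(part) for part in timeout.split(":"))
    return hours * 60 + minutes + seconds / 60


def timeout_allowed(timeout: str, plan: str) -> bool:
    """Check a functionTimeout value against the limits described above.

    `plan` is a label invented for this sketch: "consumption" or "premium".
    """
    if plan == "premium":
        # Premium accepts "-1" (unbounded) or any positive bounded duration.
        return timeout == "-1" or to_minutes(timeout) > 0
    if plan == "consumption":
        # Consumption rejects "-1" and caps bounded values at 10 minutes.
        return timeout != "-1" and 0 < to_minutes(timeout) <= CONSUMPTION_MAX_MINUTES
    raise ValueError(f"unknown plan: {plan!r}")


def build_host_json(timeout: str = "-1") -> str:
    """Return host.json text with the given functionTimeout ("-1" means unbounded)."""
    return json.dumps({"version": "2.0", "functionTimeout": timeout}, indent=2)


print(build_host_json())
print(timeout_allowed("-1", "premium"))            # True
print(timeout_allowed("-1", "consumption"))        # False
print(timeout_allowed("00:45:00", "consumption"))  # False
```

The printed JSON corresponds to the content of the host.json file at the root of the function app project. Keeping a fixed upper bound instead of `"-1"` is often the safer choice, since the 30-minute default exists to stop runaway executions.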

Plan and SKU settings in the Premium plan

When creating the plan, there are two plan size settings: the minimum number of instances (the plan size) and the maximum burst limit. If our app requires more instances than the always ready instances, it can continue to scale out until the number of instances reaches the maximum burst limit. We are billed per second for instances beyond our plan size only while they are running and allocated to us. The platform makes a best effort to scale our app out to the defined maximum limit. Each plan requires at least one instance.

Important: We are charged for each instance allocated in the minimum instance count, regardless of whether functions are running.

In most cases, this autoscaling works well and requires no user intervention. Scaling beyond the minimum, however, is best effort: although it is rare, scale-out may be delayed if additional instances are not available at a given moment.

Available instance SKUs

When creating or scaling our plan, we can choose from three instance sizes. We are billed per second for the total number of cores and the memory allocated to each instance. Our app can automatically scale out to multiple instances as needed.
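To make the always ready and pre-warmed walkthrough, the burst limit, and the per-second billing more concrete, here is a small Python simulation. It is a simplified model under stated assumptions: scale-out is treated as instantaneous, the always ready count is treated as the billed minimum, every provisioned instance (including the pre-warmed buffer) is counted for each second it is allocated, and `PremiumPlan`, `provision`, and `billed_usage` are hypothetical names. The default `cores` and `memory_gb` values mirror the smallest Elastic Premium size (EP1, 1 vCPU and 3.5 GB) as documented at the time of writing; check the current SKU table before relying on them.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PremiumPlan:
    """Hypothetical model of the Premium plan settings described above (not an Azure SDK type)."""

    always_ready: int       # instances kept ready at all times (billed even when idle)
    max_burst: int          # maximum burst limit for scale-out
    prewarmed: int = 1      # pre-warmed buffer size; 1 is the default
    cores: float = 1.0      # vCPUs per instance (assumed EP1-like size)
    memory_gb: float = 3.5  # memory per instance in GB (assumed EP1-like size)

    def __post_init__(self) -> None:
        # Every plan keeps at least one instance, and the burst limit cannot be below it.
        if not 1 <= self.always_ready <= self.max_burst:
            raise ValueError("expected 1 <= always_ready <= max_burst")


def provision(plan: PremiumPlan, demand: int) -> Tuple[int, int]:
    """Return (active, provisioned) instance counts for the current demand.

    `demand` is the number of instances the workload needs right now.
    """
    if demand <= 0:
        # Idle: only the always ready instances exist; no pre-warmed buffer is allocated.
        return 0, plan.always_ready
    # The always ready instances activate first; beyond them, scale out up to the burst limit.
    active = min(max(demand, plan.always_ready), plan.max_burst)
    # Keep the pre-warmed buffer on top of the active instances, but never past the maximum.
    provisioned = min(active + plan.prewarmed, plan.max_burst)
    return active, provisioned


def billed_usage(plan: PremiumPlan, demand_per_second: List[int]) -> Tuple[float, float]:
    """Return (core-seconds, GB-seconds) for a trace with one demand value per second."""
    # Each provisioned instance is counted for every second it is allocated.
    instance_seconds = sum(provision(plan, d)[1] for d in demand_per_second)
    return instance_seconds * plan.cores, instance_seconds * plan.memory_gb


plan = PremiumPlan(always_ready=5, max_burst=10)
for demand in [0, 1, 6, 10, 15]:
    print(demand, provision(plan, demand))
# 0 (0, 5)
# 1 (5, 6)
# 6 (6, 7)
# 10 (10, 10)
# 15 (10, 10)
print(billed_usage(plan, [0, 1, 6]))  # (18.0, 63.0)
```

The counts follow the example described earlier: five provisioned instances while idle, six after the first trigger, seven once a sixth instance becomes active, and no buffer beyond the burst limit. Multiplying the returned core-seconds and GB-seconds by the current regional rates would give an estimated bill; no rates are assumed here.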

Memory usage

Running our function app on an instance with more memory doesn't necessarily mean that the app uses all of it. For example, a JavaScript function app is constrained by the default memory limit in Node.js. To increase this fixed memory limit, add the app setting `languageWorkers:node:arguments` with a value of `--max-old-space-size=<max memory in MB>`.

Azure Functions: Dedicated hosting plans

An App Service plan defines a set of compute resources for apps to run on; in traditional hosting, these compute resources are analogous to a server farm. One or more function apps can be configured to run on the same compute resources (App Service plan) as other App Service apps, such as web apps. Consider an App Service plan in the following scenarios:

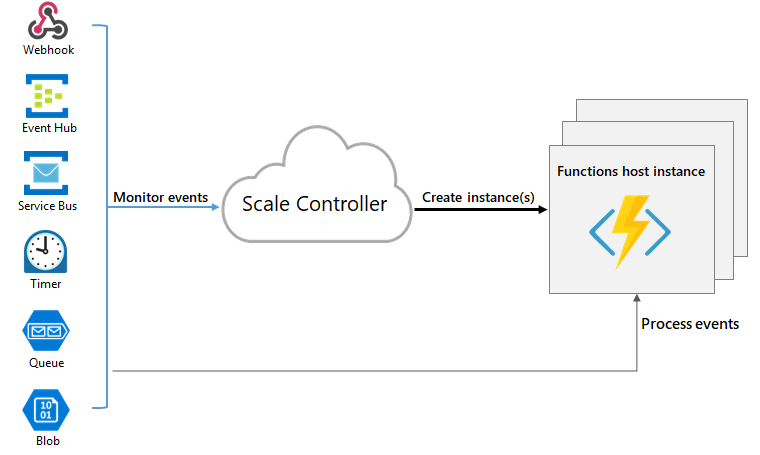

Billing

We pay for function apps in an App Service plan in the same way as for other App Service resources: we are charged for the plan, regardless of how many function apps or web apps run in it.

Always On

If we run on an App Service plan, we should enable the Always on setting so that our function app runs correctly. Without it, the Functions runtime goes idle after a few minutes of inactivity, and only HTTP triggers can wake our functions up. The Always on setting is available only on App Service plans; on a Consumption plan, the platform activates function apps automatically.

Scaling

With an App Service plan, we can scale out manually by adding more VM instances. We can also enable autoscale, although it is slower than the elastic scale of the Premium plan. When running JavaScript (Node.js) functions on an App Service plan, we should choose a plan with fewer vCPUs.

App Service Environments

Running in an App Service Environment (ASE) lets us fully isolate our functions and take advantage of a higher number of instances than an App Service plan allows. If we only need to run our function app in a virtual network, the Premium plan is the better choice.

Azure Functions adds CPU and memory capacity in the Consumption and Premium plans by adding more instances of the Functions host. If we delete the function app's main storage account, the function code files are deleted along with it and cannot be recovered.

Runtime scaling

Azure Functions uses a component called the scale controller to monitor the rate of events and decide whether to scale out or scale in. The scale controller uses heuristics specific to each trigger type; for example, with an Azure Queue storage trigger, it scales based on the queue length and the age of the oldest queue message. The function app is the unit of scale for Azure Functions: when it scales out, more resources are allocated to run multiple instances of the Functions host. Conversely, as compute demand decreases, the scale controller removes Functions host instances. In the Consumption plan, when no functions are running within a function app, the number of instances is eventually scaled in to zero.

Cold start

After our function app has been idle for a number of minutes, the platform may scale the number of instances on which it runs down to zero. The next request then has the added latency of scaling from zero to one.
This period of latency is called a cold start. The number of dependencies our function app requires can affect the cold start time. Cold start is more of an issue for synchronous operations that must return a response, such as HTTP triggers.

Understanding scaling behaviours

Scaling can vary based on several factors, and it behaves differently depending on the trigger and language selected. There are a few nuances of scaling behaviour that we should be aware of:
