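SciPy Optimize

The scipy.optimize package provides several commonly used optimization algorithms. This tutorial covers the following topics: minimization with the Nelder-Mead simplex algorithm, least-squares minimization, root finding, curve fitting, and fsolve().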

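Nelder-Mead Simplex Algorithm

The minimize() function minimizes a scalar function of one or more variables. Passing method='Nelder-Mead' selects the Nelder-Mead simplex algorithm, which uses only function values and needs no derivatives. The call returns a result object like the following.

Output: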

final_simplex: (array([[ 1. , -1.27109375], [ 1. , -1.27118835], [ 1. , -1.27113762]]), array([0., 0., 0.]))

fun: 0.0

message: 'Optimization terminated successfully.'

nfev: 147

nit: 69

status: 0

success: True

x: array([ 1. , -1.27109375])
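To show the call pattern behind a result like the one above, here is a minimal sketch. The objective function, its name `objective`, and the starting point are assumptions chosen for illustration; they are not the ones that produced the output shown.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical objective for illustration: a quadratic bowl with its
# minimum at (1, 2). It is not the function behind the output above.
def objective(x):
    return (x[0] - 1.0) ** 2 + (x[1] - 2.0) ** 2

# Assumed starting point; Nelder-Mead needs no gradient information.
x0 = np.array([2.0, -1.0])
res = minimize(objective, x0, method="Nelder-Mead")

print(res.success)        # whether the solver reports convergence
print(res.x)              # estimated minimizer, expected near (1, 2)
print(res.nit, res.nfev)  # iterations and function evaluations used
```

The returned object exposes the same fields that appear in the printed output, such as `x`, `fun`, `nit`, `nfev`, and `message`.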

Least-Squares Minimization

The least_squares() function solves nonlinear least-squares problems with bounds on the variables. Given the residuals f(x) (the differences between observed and predicted values of the data, an m-dimensional real function of n real variables) and the loss function rho(s) (a scalar function), least_squares() finds a local minimum of the cost function F(x):

F(x) = 0.5 * sum(rho(f_i(x)**2), i = 0, ..., m - 1), subject to lb <= x <= ub

An example run produces the following result.

Output:

active_mask: array([0., 0.])

cost: 0.0

fun: array([0., 0.])

grad: array([0., 0.])

jac: array([[-30.00000045, 10. ], [ -1. , 0. ]])

message: '`gtol` termination condition is satisfied.'

nfev: 4

njev: 4

optimality: 0.0

status: 1

success: True

x: array([1., 1.])
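A minimal sketch of a least_squares() call follows. The residual function and the starting point are assumptions: this Rosenbrock-style variant was chosen because its Jacobian at (1, 1) is [[-30, 10], [-1, 0]], which is consistent with the `jac` printed above, but the listing behind that output is not shown here.

```python
import numpy as np
from scipy.optimize import least_squares

# Assumed residual vector (a Rosenbrock-style variant), for illustration.
# Both residuals vanish only at x = (1, 1).
def residuals(x):
    return np.array([10.0 * (x[1] - x[0] ** 3), 1.0 - x[0]])

x0 = np.array([2.0, 2.0])           # assumed starting point
res = least_squares(residuals, x0)  # default loss is linear: rho(s) = s

print(res.x)     # estimated solution, expected near (1, 1)
print(res.cost)  # 0.5 * sum of squared residuals at the solution
print(res.jac)   # finite-difference Jacobian at the solution
```

Bounds on the variables can be supplied through the `bounds` argument as a `(lb, ub)` pair; the sketch leaves them at their unbounded defaults.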

Root Finding

For a single-variable (scalar) equation, SciPy offers four bracketing root-finding algorithms: brentq(), brenth(), ridder(), and bisect(). Each needs the endpoints of an interval in which a root is expected, that is, an interval over which the function changes sign.
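The bracketing requirement can be sketched as follows. The test function `f` and the interval [-2, 0] are assumptions picked for illustration; any continuous function with opposite signs at the two endpoints would do.

```python
import numpy as np
from scipy.optimize import brentq, bisect

# Assumed test function for illustration.
def f(x):
    return 2.0 * x + 3.0 * np.cos(x)

# f(-2) < 0 and f(0) > 0, so the interval [-2, 0] brackets a root.
a, b = -2.0, 0.0
assert f(a) * f(b) < 0

root_brentq = brentq(f, a, b)  # Brent's method
root_bisect = bisect(f, a, b)  # plain bisection, slower but simple

print(root_brentq, root_bisect)
```

Both solvers raise a ValueError if the function has the same sign at the two endpoints, so checking the bracket first gives a clearer failure.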

The root() function finds a root of a nonlinear equation or a system of nonlinear equations. Several methods are available, such as hybr (the default) and lm, the Levenberg-Marquardt method; both come from MINPACK. Let's consider the equation 2x + 3cos(x) = 0.

Output:

fjac: array([[-1.]])

fun: array([2.22044605e-16])

message: 'The solution converged.'

nfev: 10

qtf: array([-1.19788401e-10])

r: array([-4.37742564])

status: 1

success: True

x: array([-0.91485648])
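A minimal sketch of a root() call for the equation 2x + 3cos(x) = 0 is given here. The initial guess is an assumption, so counters such as `nfev` may differ from the output above.

```python
import numpy as np
from scipy.optimize import root

# Left-hand side of 2x + 3cos(x) = 0; x arrives as a 1-element array.
def func(x):
    return 2.0 * x + 3.0 * np.cos(x)

# Assumed initial guess; 'hybr' is the default method, named explicitly here.
sol = root(func, x0=[-1.0], method="hybr")

print(sol.success)  # True when the solver reports convergence
print(sol.x)        # estimated root
print(sol.fun)      # residual at the estimated root, close to zero
```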

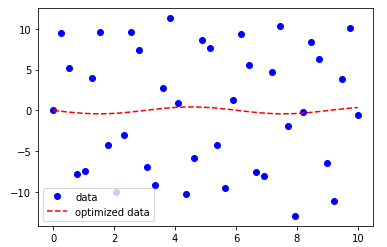

Optimize Curve Fitting

Curve fitting is the process of finding the mathematical function that best fits a series of data points, possibly subject to constraints. In scipy.optimize, the curve_fit() function does this and returns the fitted coefficients together with their covariance matrix. An example run gives the following result.

Output:

Sine function coefficients: [-0.42111847 1.03945217]

Covariance of coefficients: [[3.03920718 0.05918002] [0.05918002 0.43566354]]
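A sine fit of this kind can be sketched as below. The model `sine_model`, the synthetic data, the noise level, the seed, and the initial guess `p0` are all assumptions made for illustration, so the fitted numbers will not match the output above.

```python
import numpy as np
from scipy.optimize import curve_fit

# Assumed model: a sine with amplitude a and angular frequency b.
def sine_model(x, a, b):
    return a * np.sin(b * x)

# Synthetic data: known coefficients plus a little Gaussian noise.
rng = np.random.default_rng(0)  # fixed seed so the run is repeatable
true_a, true_b = 2.9, 1.05
x_data = np.linspace(-5, 5, num=40)
noise = rng.normal(scale=0.1, size=x_data.size)
y_data = sine_model(x_data, true_a, true_b) + noise

# p0 is the initial guess for (a, b).
params, params_cov = curve_fit(sine_model, x_data, y_data, p0=[2.0, 1.0])

print("Sine function coefficients:", params)
print("Covariance of coefficients:", params_cov)
```

The square roots of the diagonal of the covariance matrix, `np.sqrt(np.diag(params_cov))`, give one-standard-deviation estimates for the fitted coefficients.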

SciPy fsolve

The scipy.optimize module provides the fsolve() function, which finds the roots of a function. It returns the roots of the (nonlinear) equations defined by func(x) = 0, given a starting estimate. An example run gives the following result.

Output:

(array([1.]), array([82.17252895]))
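For comparison, here is a minimal sketch of fsolve() applied to a small system of two equations. The system and the starting estimate are assumptions chosen for illustration and are unrelated to the output above.

```python
import numpy as np
from scipy.optimize import fsolve

# Assumed system for illustration:
#   x0 * cos(x1) = 4
#   x1 * x0 - x1 = 5
def equations(x):
    return [x[0] * np.cos(x[1]) - 4.0,
            x[1] * x[0] - x[1] - 5.0]

# Starting estimate; fsolve returns the solution as an array.
solution = fsolve(equations, [1.0, 1.0])

print(solution)
# Substituting back should give residuals close to zero.
print(np.isclose(equations(solution), [0.0, 0.0]))
```

Checking the residuals at the returned point is a useful habit, because fsolve() can return its last iterate even when it has not converged; passing `full_output=True` also returns a status flag and message.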
