Agent Environment in AI

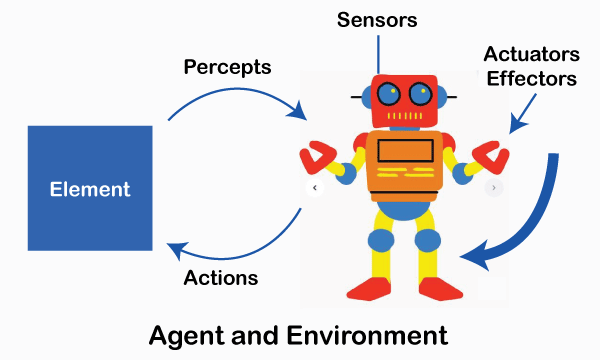

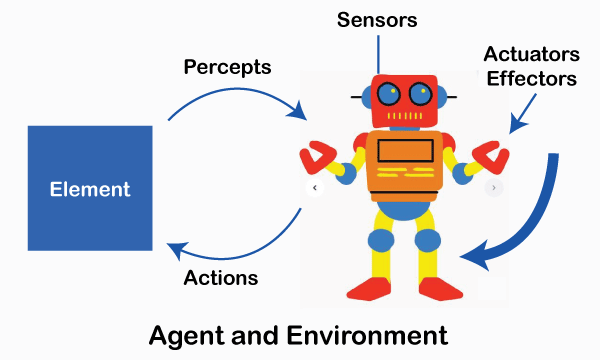

An environment is everything in the world that surrounds the agent but is not part of the agent itself. It can be described as the situation in which an agent is present.

The environment is where the agent lives and operates, and it provides the agent with something to sense and act upon. An environment is mostly said to be non-deterministic.

Features of Environment

As per Russell and Norvig, an environment can have various features from the point of view of an agent:

- Fully observable vs Partially Observable

- Static vs Dynamic

- Discrete vs Continuous

- Deterministic vs Stochastic

- Single-agent vs Multi-agent

- Episodic vs Sequential

- Known vs Unknown

- Accessible vs Inaccessible

1. Fully observable vs Partially Observable:

- If an agent's sensors can sense or access the complete state of the environment at each point in time, the environment is fully observable; otherwise it is partially observable. For example, imagine a chess-playing agent. The agent can observe the state of the chessboard at all times: its sensors (here, vision or direct access to the board's state) provide complete information about the current position of every piece. This is a fully observable environment because the agent has perfect information about the state of the world.

- A fully observable environment is easy to deal with because the agent does not need to maintain an internal state to keep track of the history of the world. A partially observable environment is harder. For example, consider a self-driving car navigating a busy city. Although the car has sensors such as cameras, lidar, and radar, it cannot see everything at all times: buildings, other vehicles, and pedestrians can obstruct its sensors. The car's environment is partially observable because it does not have complete and constant access to all relevant information. It needs to maintain an internal state and history to make informed decisions even when some information is temporarily unavailable.

- If an agent has no sensors at all, the environment is called unobservable. For example, think about an agent designed to predict earthquakes but placed in a sealed, windowless room with no sensors or access to external data. The agent has no way to gather information about the outside world, so it cannot sense any aspect of its environment.
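
The difference between the first two cases can be sketched in a few lines of Python. This is a minimal illustration, not a standard API: the corridor world, `WorldState`, `partial_percept`, `sensor_range`, and `TrackingAgent` are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class WorldState:
    """True state of a hypothetical 1-D corridor world."""
    agent_pos: int
    obstacle_pos: int


def full_percept(state: WorldState) -> WorldState:
    """Fully observable: the percept is the complete state."""
    return state


def partial_percept(state: WorldState, sensor_range: int = 1) -> Tuple[int, Optional[int]]:
    """Partially observable: the obstacle is reported only when it is in range."""
    in_range = abs(state.obstacle_pos - state.agent_pos) <= sensor_range
    return state.agent_pos, (state.obstacle_pos if in_range else None)


class TrackingAgent:
    """Keeps an internal state: the last position where the obstacle was seen."""

    def __init__(self) -> None:
        self.last_seen: Optional[int] = None

    def update(self, percept: Tuple[int, Optional[int]]) -> Optional[int]:
        _, obstacle = percept
        if obstacle is not None:
            self.last_seen = obstacle  # refresh memory when the sensor sees it
        return self.last_seen          # otherwise fall back on memory
```

With `full_percept` the agent could act on the percept alone. With `partial_percept` it has to carry `last_seen` forward, which is the "internal state" the text refers to.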

2. Deterministic vs Stochastic:

- If the current state of the environment and the agent's selected action completely determine the next state, the environment is called deterministic. Chess is a classic example. The rules are well-defined, and each move has a clear and predictable outcome based on those rules. If you move a pawn from one square to another, the resulting state of the chessboard is entirely determined by that action. The opponent's reply is a choice made by another agent, not randomness in the rules. In a deterministic environment like chess, knowing the current state and the action taken allows you to completely determine the next state.

- A stochastic environment has an element of randomness: the next state cannot be completely determined from the current state and the agent's action. The stock market is an example. It is influenced by a multitude of unpredictable factors, including economic events, investor sentiment, and news. While there are patterns and trends, the exact behavior of stock prices cannot be completely determined by any individual or agent. Even with access to extensive data and analysis tools, market movements remain highly unpredictable.

- In a deterministic, fully observable environment, an agent does not need to worry about uncertainty.
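
As a rough sketch, the two kinds of environment differ only in their transition function. The functions below are illustrative assumptions (a one-dimensional position, and a made-up noise model in which an action "slips" with probability `slip_prob`); they are not taken from any library.

```python
import random


def deterministic_step(position: int, action: int) -> int:
    """The same state and action always yield the same next state."""
    return position + action


def stochastic_step(position: int, action: int, rng: random.Random,
                    slip_prob: float = 0.2) -> int:
    """With probability slip_prob the action has no effect (assumed noise model)."""
    if rng.random() < slip_prob:
        return position           # the action "slipped"; state unchanged
    return position + action      # the action worked as intended
```

Calling `deterministic_step(3, 1)` gives the same answer every time, whereas repeated calls to `stochastic_step(3, 1, rng)` return either 3 or 4, so the agent can only reason about probabilities of outcomes.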

3. Episodic vs Sequential:

- In an episodic environment, the agent's experience is a series of one-shot actions, and only the current percept is required to choose each action. For example, an agent that spots defective parts on an assembly line works in an episodic environment: each decision is based on the current part alone, and it does not affect whether the next part is defective. The agent does not need to remember past decisions or think ahead.

- However, in a sequential environment, the current decision can affect all future decisions, so the agent needs to take earlier actions into account and think ahead. Board games are sequential: in Tic-Tac-Toe and chess alike, every move changes the position that all later moves start from. In chess, the current position of the pieces, the moves that led to it (which matter for rules such as castling and repetition), and potential future moves all influence the best course of action. To play effectively, a player has to anticipate future moves and plan accordingly.
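
The contrast can be sketched with two toy functions. Both are hypothetical: `inspect_part` stands for a one-shot decision that uses only the current percept, and `run_sequential` stands for a task with a limited fuel budget, where early actions restrict what is possible later.

```python
from typing import Iterable


def inspect_part(width_mm: float, target_mm: float = 10.0,
                 tolerance_mm: float = 0.5) -> str:
    """Episodic: the decision depends only on the current percept."""
    return "accept" if abs(width_mm - target_mm) <= tolerance_mm else "reject"


def run_sequential(actions: Iterable[int], fuel: int = 3) -> int:
    """Sequential: every move consumes fuel, so early choices limit later ones."""
    position = 0
    for action in actions:
        if fuel == 0:
            break                 # earlier moves used up the budget
        if action != 0:
            position += action
            fuel -= 1
    return position
```

The order of calls to `inspect_part` does not matter, but `run_sequential([1, 1, 1, -1])` and `run_sequential([-1, 1, 1, 1])` end in different positions because the first three moves exhaust the fuel.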

4. Single-agent vs Multi-agent:

- If only one agent is involved in an environment and operates by itself, the environment is called a single-agent environment. Solitaire is a classic example. When you play Solitaire, you are the only agent involved. You make all the decisions and actions to achieve the goal, which is to arrange a deck of cards in a specific way. There are no other agents or players interacting with you, so you do not need to consider the actions or decisions of other entities.

- However, if multiple agents are operating in an environment, it is called a multi-agent environment. A soccer match is an example. There are two teams, each consisting of multiple players (agents). The players on a team work together to achieve common goals (scoring goals and preventing the opposing team from scoring). Each player has their own set of actions and decisions, and they interact with both their teammates and the opposing team. The outcome of the game depends on the coordinated actions and strategies of all the agents on the field. It is a multi-agent environment because multiple autonomous entities (players) interact in a shared environment.

- Agent design problems in a multi-agent environment are different from those in a single-agent environment.
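
One way to see why the design problem changes is to compare the transition functions. The tug-of-war setting and the names `single_agent_step` and `multi_agent_step` below are assumptions made up for this sketch.

```python
from typing import Mapping


def single_agent_step(rope_pos: int, pull: int) -> int:
    """Single-agent: the next state depends on one agent's action only."""
    return rope_pos + pull


def multi_agent_step(rope_pos: int, pulls: Mapping[str, int]) -> int:
    """Multi-agent: the next state depends on the joint action of all agents."""
    return rope_pos + sum(pulls.values())
```

In the multi-agent version, the same pull of `+2` by one agent can move the rope forward or backward depending on what the others do, so each agent has to reason about the other agents' choices.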

5. Static vs Dynamic:

- If the environment can change while an agent is deliberating, it is called a dynamic environment; otherwise it is called a static environment.

- Static environments are easy to deal with because an agent does not need to keep looking at the world while deciding on an action. A crossword puzzle is an example. When you work on a crossword puzzle, the puzzle itself does not change while you are thinking about your next move. The arrangement of clues and empty squares remains constant, so you can take your time to find the best word for each blank. It is a static environment because nothing changes while you deliberate.

- However, in a dynamic environment, agents need to keep looking at the world before each action. Taxi driving is an example. The road conditions, traffic, pedestrians, and other vehicles are constantly changing. As a taxi driver, you need to keep a constant watch on the road and adapt your actions in real time. It is a dynamic environment because it evolves while you are deliberating and taking action.
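
The effect of deliberation time can be sketched as follows. `World`, `drift`, and `act_on_snapshot` are hypothetical names; a `drift` of 0 models a static environment and any other value models a dynamic one.

```python
from typing import Tuple


class World:
    """A target that moves by `drift` cells per clock tick (drift=0 means static)."""

    def __init__(self, target: int = 0, drift: int = 0) -> None:
        self.target = target
        self.drift = drift

    def tick(self) -> None:
        self.target += self.drift


def act_on_snapshot(world: World, deliberation_ticks: int) -> Tuple[int, int]:
    """Observe once, think for a while, then report (observed, actual) target."""
    observed = world.target
    for _ in range(deliberation_ticks):
        world.tick()              # the world keeps running while the agent thinks
    return observed, world.target
```

In a static world the snapshot is still valid after deliberation. In a dynamic world the two values drift apart, which is why the agent has to keep sensing.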

6. Discrete vs Continuous:

- If an environment has a finite number of percepts and actions that can be performed within it, it is called a discrete environment; otherwise it is called a continuous environment.

- Chess is an example of a discrete environment. In chess, there are a finite number of distinct chess pieces (e.g., pawns, rooks, knights) and a finite number of squares on the chessboard. The rules of chess define clear, discrete moves that a player can make. Each piece can be in a specific location on the board, and players take turns making individual, well-defined moves. The state of the chessboard is discrete and can be described by the positions of the pieces on the board.

- Controlling a robotic arm to perform precise movements in a factory setting is an example of a continuous environment. In this context, the robot arm's position and orientation can exist along a continuous spectrum. There are virtually infinite possible positions and orientations for the robotic arm within its workspace. The control inputs to move the arm, such as adjusting joint angles or applying forces, can also vary continuously. Agents in this environment must operate within a continuous state and action space, and they need to make precise, continuous adjustments to achieve their goals.
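
A practical consequence is that discrete actions can be enumerated while continuous actions can only be sampled or optimized over. The sketch below assumes an empty 8x8 board for the knight and a hypothetical three-joint arm whose angles lie in [-pi, pi]; both functions are illustrative only.

```python
import math
import random
from typing import List, Tuple

KNIGHT_OFFSETS = [(1, 2), (2, 1), (2, -1), (1, -2),
                  (-1, -2), (-2, -1), (-2, 1), (-1, 2)]


def knight_moves(file: int, rank: int) -> List[Tuple[int, int]]:
    """Discrete: destinations on an empty 8x8 board can be listed exhaustively."""
    return [(file + df, rank + dr) for df, dr in KNIGHT_OFFSETS
            if 0 <= file + df < 8 and 0 <= rank + dr < 8]


def sample_joint_angles(rng: random.Random, n_joints: int = 3) -> List[float]:
    """Continuous: each angle is a real number, so actions are sampled, not listed."""
    return [rng.uniform(-math.pi, math.pi) for _ in range(n_joints)]
```

`knight_moves` always returns a finite list that an agent can search through, whereas `sample_joint_angles` draws one point from an uncountable set of possible arm commands.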

7. Known vs Unknown:

- Known and unknown are not actually features of an environment; they describe the agent's state of knowledge about how the environment works.

- In a known environment, the results of all actions are known to the agent, whereas in an unknown environment the agent needs to learn how the environment works in order to choose good actions.

- It is quite possible for a known environment to be partially observable and for an unknown environment to be fully observable.

- The opening theory in chess can be considered as a known environment for experienced chess players. Chess has a vast body of knowledge regarding opening moves, strategies, and responses. Experienced players are familiar with established openings, and they have studied various sequences of moves and their outcomes. When they make their initial moves in a game, they have a good understanding of the potential consequences based on their knowledge of known openings.

- Imagine a scenario where a rover or drone is sent to explore an alien planet with no prior knowledge or maps of the terrain. In this unknown environment, the agent (rover or drone) has to explore and learn about the terrain as it goes along. It doesn't have prior knowledge of the landscape, potential hazards, or valuable resources. The agent needs to use sensors and data it collects during exploration to build a map and understand how the terrain works. It operates in an unknown environment because the results and consequences of its actions are not initially known, and it must learn from its experiences.
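
In an unknown environment the agent has to build its own model of what actions do. The sketch below is one simple way to do that, assuming a deterministic toy world: `hidden_dynamics` is a made-up rule (a ring of five cells) that the agent cannot inspect, and `ModelLearner` and `explore` are hypothetical helpers that record what was observed.

```python
from typing import Dict, Iterable, Optional, Tuple


def hidden_dynamics(state: int, action: int) -> int:
    """The environment's real rule (a ring of 5 cells); unknown to the agent."""
    return (state + action) % 5


class ModelLearner:
    """Learns a transition table (state, action) -> next_state from experience."""

    def __init__(self) -> None:
        self.model: Dict[Tuple[int, int], int] = {}

    def observe(self, state: int, action: int, next_state: int) -> None:
        self.model[(state, action)] = next_state

    def predict(self, state: int, action: int) -> Optional[int]:
        return self.model.get((state, action))  # None means "not tried yet"


def explore(learner: ModelLearner, start: int, actions: Iterable[int]) -> int:
    """Act in the world and record every transition that is experienced."""
    state = start
    for action in actions:
        next_state = hidden_dynamics(state, action)
        learner.observe(state, action, next_state)
        state = next_state
    return state
```

Before exploring, `predict` returns `None` for every pair. After a few steps the learner can predict the outcomes it has already seen, which is what moving from an unknown toward a known environment looks like in this toy setting.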

8. Accessible vs Inaccessible:

- If an agent can obtain complete and accurate information about the environment's state, the environment is called accessible; otherwise it is called inaccessible.

- For example, imagine an empty room equipped with highly accurate temperature sensors that provide real-time measurements at any point within the room. An agent placed in this room can obtain complete and accurate temperature information at any time and base its decisions on it. This environment is accessible because the agent can acquire complete and accurate information about the state of the room, specifically its temperature.

- By contrast, consider a satellite tasked with monitoring an event on Earth, such as a natural disaster or the condition of a remote area. The satellite can capture images and data from space, but it cannot access fine-grained details of the event. It may see a forest fire, for instance, but it cannot determine the exact temperature at specific locations within the fire or identify individual objects on the ground. The observations are valuable, but the environment being monitored is vast and complex, so complete and detailed information about every aspect of the event is out of reach. In this case, the Earth's surface is an inaccessible environment for obtaining fine-grained information about specific events.


